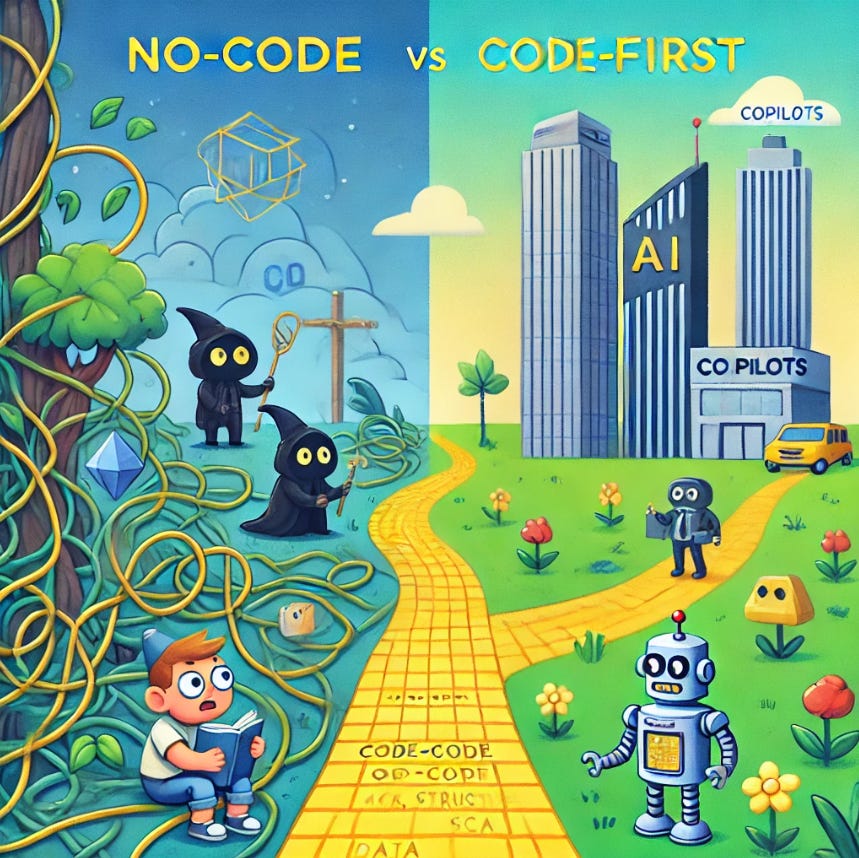

What we learned from building a no-code data stack. And why we changed course.

How AI copilots solve what no-code apps simply cannot

I initially shared this with fellow YC founders on Bookface, but the lessons felt too important not to share more broadly.

One of the most painful and persistent truths in software is that complexity compounds. Every decision you make at the beginning becomes a foundation, and when that foundation is flawed, every subsequent layer is harder to build and maintain. Nowhere is this more evident than in the modern data stack (a.k.a. MDS).

When we started Structured, we set out to solve a problem that seemed, at first glance, straightforward. Businesses have data locked in different systems: CRMs, product analytics platforms, billing tools, and more. To answer even basic business questions, they needed to extract and combine data from these silos. The tools built into these platforms weren’t flexible enough, so businesses were forced to choose between two bad options:

Build a Modern Data Stack: Hire data engineers, set up a warehouse, ETL pipelines, orchestration, and BI tools. This is expensive, time-consuming, and requires specialized skills that many companies just don’t have.

Manually Export Data: Analysts export CSVs from different tools, manually clean and combine the data, and create one-off models in Excel. This is faster and cheaper upfront, but it doesn’t scale and is prone to errors, bottlenecks, and institutional “tribal knowledge.”

Neither of these options was ideal. The first came with massive overhead; the second created fragile, unsustainable results. We thought the solution was to build a tool that combined the best of both worlds: the power and rigor of the modern data stack, but simplified for non-technical users.

The Low-Code/No-Code Bet

Our approach was ambitious. We wanted to build a tool that handled every step of the data pipeline — data ingestion, modeling, schema mapping, metric definitions, and visualization — all through an intuitive and simple no-code SaaS interface. Our vision was to empower analysts, ops teams, and business users to do what once required a full data engineering team.

To make it even better, we integrated AI every step of the way. We used it to suggest schema mappings, generate metric definitions, and recommend dimensions and measures. In theory, this should have made it easier for non-technical users to build a robust analytics stack.

For simple use cases, the tool worked beautifully and felt like magic. But as we built, iterated, and onboarded more use cases, we uncovered deep structural flaws in this approach. These lessons have shaped our current belief that low-code/no-code solutions are fundamentally misaligned with the challenges of real-world data complexity.

Automation vs. Flexibility: The Low-Code Trade-Off

Every user interface, no matter how clever, makes simplifying assumptions. Those assumptions might hold for small or generic use cases, but as soon as you encounter edge cases (the things that make your business unique), those assumptions fall apart.

Example Use Case: Schema Mapping

For companies with just a few tables, a drag-and-drop schema mapping interface worked well.

For companies with thousands of tables and tens of thousands of relationships, the UI became unwieldy. Representing complex relationships and edge cases visually was simply infeasible.

Worse, the assumptions baked into the UI (e.g., automatic joins or mappings) often produced errors or failed to capture nuanced business logic, forcing users into manual, cumbersome workarounds.

This pattern repeated across every part of our tool. Metric definitions, data transformations, visualizations… each one required us to make assumptions to simplify the interface. And in doing so, we were constantly butting up against the same problem: to truly solve real-world complexity, you need flexibility. But flexibility is the enemy of simplicity in a UI.

💡 Lesson Learned: The illusion of simplicity introduces hidden complexity.

To make the system accessible, we relied heavily on AI to automate tasks like schema mapping, metric generation, and visualization recommendations. While this automation worked for simple use cases, it came at the cost of flexibility. AI-generated mappings often made incorrect assumptions, requiring manual corrections. However, the UI lacked the tools to refine these mappings at scale. Users had to follow predefined paths for setting up pipelines. Deviating from these paths to handle edge cases was either impossible or required workarounds that broke the system’s abstractions. While the system worked well for 80% of use cases, the remaining 20% caused significant frustration, as users had no way to customize or extend the system to meet their needs. Users need tools that let them override defaults and customize workflows to reflect their unique requirements.
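To make the idea of overriding defaults concrete, here is a minimal sketch in which suggested mappings are plain data and user overrides are layered on top. The `ColumnMapping` type, the `apply_overrides` helper, and the table and column names are all hypothetical illustrations, not part of any real product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnMapping:
    source: str                # hypothetical source column, e.g. "crm.accounts.owner_id"
    target: str                # hypothetical target field, e.g. "customer.id"
    origin: str = "suggested"  # "suggested" (by automation) or "override" (by a user)


def apply_overrides(suggested, overrides):
    """Replace the suggestion for a source column whenever the user supplied an override."""
    merged = {m.source: m for m in suggested}
    for source, target in overrides.items():
        merged[source] = ColumnMapping(source, target, origin="override")
    # Sort by source so the result is stable and easy to diff in version control.
    return sorted(merged.values(), key=lambda m: m.source)


suggested = [
    ColumnMapping("billing.invoices.cust", "customer.id"),
    ColumnMapping("crm.accounts.owner_id", "customer.id"),  # an incorrect guess
]
overrides = {"crm.accounts.owner_id": "sales_rep.id"}

final = apply_overrides(suggested, overrides)
for mapping in final:
    print(mapping)
```

Because the overrides live in a plain dictionary, correcting a thousand mappings is the same operation as correcting one, which is the kind of refinement at scale that a drag-and-drop interface struggles to offer.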

Why Code Wins

Code, by contrast, thrives on complexity. A well-designed API or scripting interface allows users to express exactly what they need, without the constraints of a UI. More importantly, code comes with decades of hard-earned best practices—version control, CI/CD, testing frameworks—that make it robust and scalable.

Low-code tools actively strip away the scaffolding that makes software engineering actually powerful. Without version control, you can’t track changes. Without CI/CD, you can’t ensure reproducibility. Without proper testing, you can’t catch errors before they break something downstream. All of this is second nature in code-first systems, but it’s missing in UI-driven tools.

The best software doesn’t just make things easy to use generally. It makes it easy to do the right thing. Low-code tools promise ease of use, but in practice, they make it harder to follow best practices. And as soon as you hit an edge case, they force you into workarounds that compound complexity over time.
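As a small illustration of what testing buys you, the sketch below defines a metric as an ordinary function and checks it with a unit test. The `net_revenue` metric and the invoice fields are assumptions made up for this example.

```python
def net_revenue(invoices):
    """Sum invoice amounts, skipping any invoice marked as refunded."""
    return sum(i["amount"] for i in invoices if not i.get("refunded", False))


def test_net_revenue_excludes_refunds():
    invoices = [
        {"amount": 100},
        {"amount": 40, "refunded": True},
        {"amount": 60},
    ]
    assert net_revenue(invoices) == 160


# The test can be collected by a runner such as pytest, or called directly.
test_net_revenue_excludes_refunds()
```

If someone later changes how refunds are handled, the test fails in CI before the wrong number reaches a dashboard, and the change itself shows up as a reviewable diff.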

A Unified Control Plane

The other major problem with the modern data stack is fragmentation. Every tool (ETL, transformation, visualization) has its own interface, its own assumptions, and its own configuration. This creates a fractured system where the business logic is scattered across multiple tools, making it impossible to have a single source of truth.

The ideal solution is a unified control plane. A system where the configuration, business logic, and data flows are defined in one place, even if the underlying tools are interchangeable. This requires a code-first approach because only code can express the full nuance of a business’s needs while remaining portable across different systems. This doesn’t necessarily mean replacing every tool in the stack. Rather, we should be centralizing the logic and letting the underlying tools execute their specialized functions.

A code-first control plane allows for:

Flexibility. Users can define complex business logic without being constrained by UI limitations.

Portability. By separating configuration from execution, you can swap out tools without losing the integrity of your workflows.

Transparency. With everything defined in code, it’s easier to audit, version, and reproduce the system.
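One way to picture these three properties is a control-plane definition written as plain data. This is a minimal sketch under stated assumptions: `Metric`, `StackConfig`, and the engine labels are hypothetical names, and the engine fields are just strings, not real integrations.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Metric:
    name: str
    expression: str  # engine-agnostic description of the calculation
    source: str      # logical table the metric reads from


@dataclass(frozen=True)
class StackConfig:
    ingestion_engine: str  # which tool executes ingestion
    transform_engine: str  # which tool executes transformations
    metrics: tuple = ()    # business logic, independent of the engines above


config = StackConfig(
    ingestion_engine="fivetran",
    transform_engine="dbt",
    metrics=(Metric("mrr", "sum(amount)", "billing.subscriptions"),),
)

# Swapping the ingestion tool produces a new config; the metric definitions are untouched.
migrated = replace(config, ingestion_engine="airbyte")

print(migrated.ingestion_engine)
print(migrated.metrics == config.metrics)
```

The frozen dataclasses keep each configuration immutable, so every change is an explicit new value that can be versioned and audited.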

Composable Architecture: Decoupling Engines from Logic

To future-proof the stack, you need a composable architecture that decouples components from the control plane. This approach lets you swap out individual tools without disrupting workflows or losing institutional knowledge:

ETL. Replace one ETL tool with another (e.g., from Fivetran to Airbyte) while preserving pipeline definitions in the control plane.

Transformation. Migrate from SQL-based transformations (e.g., dbt) to a distributed engine (e.g., Spark or Flink) with minimal disruption.

Visualization/Reporting. Integrate new BI tools or APIs without redefining metrics or duplicating configuration.

Plugin-Based Architecture

Define plugins for each layer of the stack (e.g., Fivetran for ingestion, dbt for transformations, Tableau for visualization).

Require each plugin to adhere to a standard API defined by the control plane.
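A plugin contract of this kind could be sketched with Python's `typing.Protocol`. The `IngestionPlugin` interface, the `InMemoryIngestion` stand-in, and the `ControlPlane` class are hypothetical; a real connector would wrap an actual ingestion tool behind the same `sync` method.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class IngestionPlugin(Protocol):
    """Hypothetical contract that every ingestion plugin must satisfy."""

    def sync(self, source: str) -> list:
        """Return the rows currently available for the named source."""
        ...


class InMemoryIngestion:
    """Toy plugin standing in for a real connector."""

    def __init__(self, data: dict):
        self._data = data

    def sync(self, source: str) -> list:
        return list(self._data.get(source, []))


class ControlPlane:
    def __init__(self):
        self._ingestion = None

    def register_ingestion(self, plugin) -> None:
        # The runtime check only confirms a `sync` attribute exists, not its signature.
        if not isinstance(plugin, IngestionPlugin):
            raise TypeError("plugin does not implement sync()")
        self._ingestion = plugin

    def ingest(self, source: str) -> list:
        if self._ingestion is None:
            raise RuntimeError("no ingestion plugin registered")
        return self._ingestion.sync(source)


plane = ControlPlane()
plane.register_ingestion(InMemoryIngestion({"crm.accounts": [{"id": 1}]}))
rows = plane.ingest("crm.accounts")
print(rows)
```

The control plane depends only on the contract, so replacing one ingestion tool with another means writing a new plugin class rather than rewriting pipeline definitions.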

Abstracted Execution Layers

Ingestion: Standardize source connectors via frameworks like Singer or Airbyte to allow for reusable ETL pipelines.

Transformation: Compile transformations into execution plans that can run on multiple backends (e.g., SQL engines, Spark, Flink).

Visualization: Expose metrics and transformations via APIs so they can be consumed by any visualization tool.
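To illustrate compiling one transformation for multiple backends, the sketch below renders a single monthly-sum specification as SQL for two dialects that spell month truncation differently. The `MonthlySum` spec, the `compile_sql` function, and the table and column names are assumptions for illustration; a production compiler would cover far more than one expression.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonthlySum:
    table: str
    amount_col: str
    date_col: str


def compile_sql(spec: MonthlySum, dialect: str) -> str:
    """Render the same logical transformation as SQL for the given dialect."""
    if dialect == "postgres":
        month = f"date_trunc('month', {spec.date_col})"
    elif dialect == "bigquery":
        month = f"DATE_TRUNC({spec.date_col}, MONTH)"
    else:
        raise ValueError(f"unsupported dialect: {dialect}")
    return (
        f"SELECT {month} AS month, SUM({spec.amount_col}) AS total "
        f"FROM {spec.table} GROUP BY 1"
    )


spec = MonthlySum(table="invoices", amount_col="amount", date_col="created_at")
print(compile_sql(spec, "postgres"))
print(compile_sql(spec, "bigquery"))
```

The business definition lives in `spec`; only the rendering step knows about a particular engine.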

Interoperability

Use open standards and documented formats like OpenLineage or dbt’s manifest.json to maintain compatibility across tools.

Build adapters to translate business logic into tool-specific configurations.

The Copilot Revolution

Here’s where the story gets interesting. For the past decade, SaaS tools have optimized for non-technical users by abstracting complexity into UIs. But now, coding copilots such as GitHub Copilot are changing the game.

One of the criticisms of code-first systems is that they can be intimidating to non-technical users. But with the right combination of intelligent defaults and copilot guidance, even complex systems can feel approachable. For example:

Intelligent Defaults. Preconfigure pipelines, transformations, and visualizations based on best practices and the user’s specific context.

Interactive Feedback. Use copilot-driven suggestions and live previews to make every part of the system interactive and understandable.

No Dead Ends. Ensure that every part of the system can scale. If a user outgrows the default settings, the system should guide them to more advanced options without requiring a complete overhaul.
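The "no dead ends" idea can be sketched as a resolver that accepts either a named preset or a fully custom expression. The `PRESETS` table, the `resolve_expression` function, and the metric dictionary shape are hypothetical.

```python
# Hypothetical presets that cover the common cases out of the box.
PRESETS = {
    "count_rows": "COUNT(*)",
    "sum_amount": "SUM(amount)",
}


def resolve_expression(metric: dict) -> str:
    """Prefer the user's custom SQL when present; otherwise look up the named preset."""
    if "custom_sql" in metric:
        return metric["custom_sql"]
    preset = metric.get("preset")
    if preset not in PRESETS:
        raise KeyError(f"unknown preset: {preset!r}")
    return PRESETS[preset]


simple = {"name": "orders", "preset": "count_rows"}
advanced = {"name": "net_revenue", "custom_sql": "SUM(amount) - SUM(refund_amount)"}

print(resolve_expression(simple))
print(resolve_expression(advanced))
```

A user who starts with a preset can move to custom SQL by changing one field, without migrating to a different tool or rebuilding the pipeline.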

The End of Low-Code

The lesson we’ve learned is that simplicity isn’t about hiding complexity. It’s about managing it effectively. Low-code tools fail because they obscure the underlying complexity, forcing users to deal with it when things inevitably break. Code-first tools, with the right scaffolding, allow you to embrace complexity without being overwhelmed by it. With AI copilots, code-first architectures, and unified control planes, we can finally create data stacks that are both powerful and approachable.